Use Tealium to Stream Data Your AI Models Will Understand into Snowflake, Databricks, or Any Cloud

If your business still relies on Google Analytics, Adobe Analytics, or any other “after-the-fact” analytics platform as your primary data collection mechanism for your cloud data warehouse, you’re doing it backwards.

Analytics tools were designed for reporting, not for powering modern data and AI strategies. Their fixed schemas, batch processing, and rigid naming conventions add cost, introduce delays, and create confusion that frustrates data teams and limits how well AI models can interpret the data.

The Problem With Analytics-First Data Collection

When you collect data through analytics tools, you:

- Limit your visibility to what the platform measures (pageviews, events, conversions) while missing the full customer journey and offline signals.

- Accept latency as the norm, waiting hours or even days for data to be processed, batched, and distributed before downstream teams can use it.

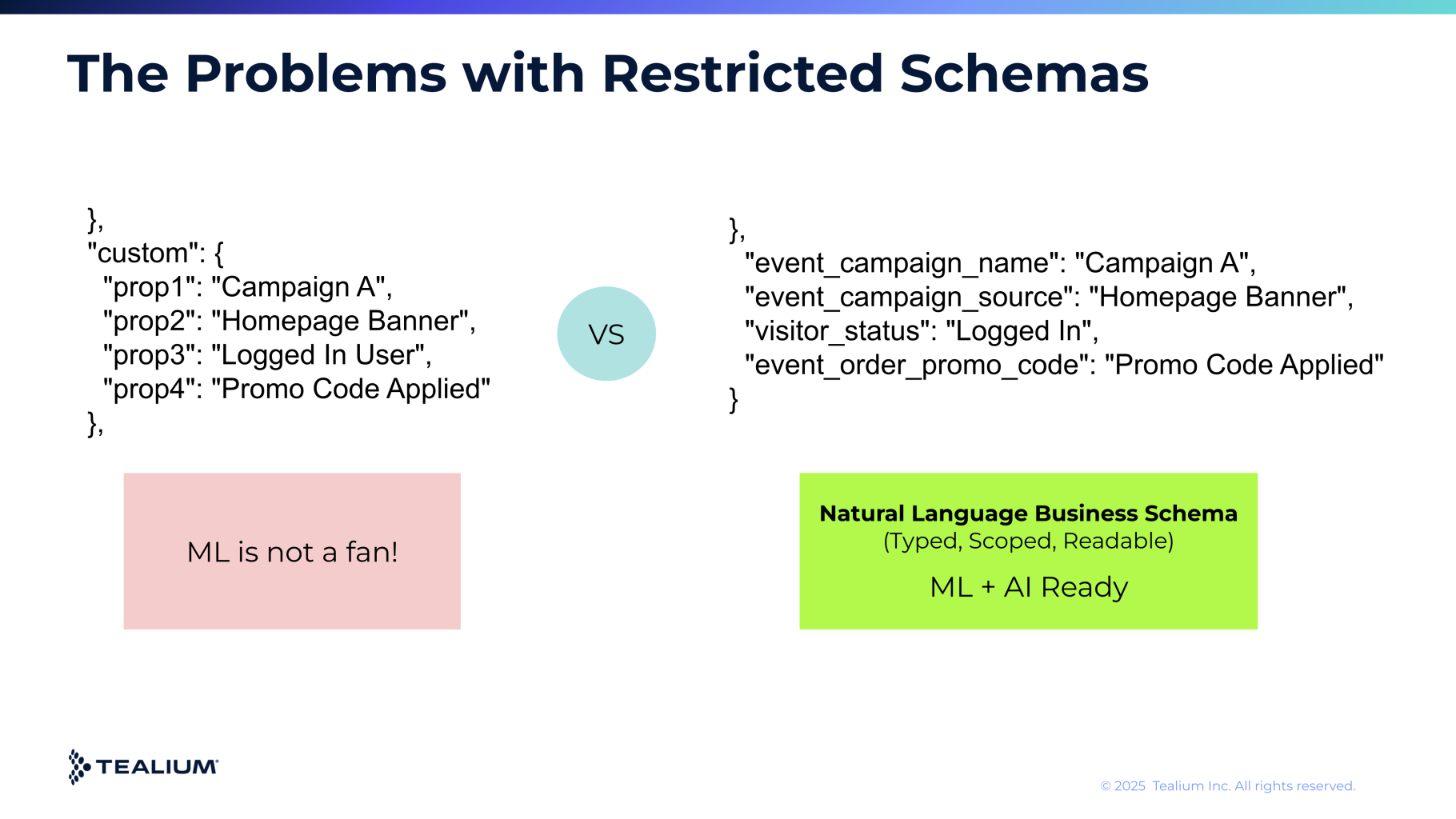

- Inherit messy, inconsistent naming with rigid schemas that confuse business users, slow down decision-making, and add unnecessary friction for AI models.

In other words, you’re building your data strategy on the legacy reporting tool’s terms, not on your business’s terms.

Why Tealium for Data Streaming Is a Better Approach

1. Business-Friendly Data Layer

Tealium enables you to define customer data in clear business terms: standardized event names, attributes, and values that everyone can understand. Instead of prop6 or customDimension3, you have cart_add and loyalty_status. No decoder rings. No lookup tables.

The payoff? Data teams spend less time wrangling data, and AI models require less training because the data is already structured in meaningful terms that both humans and machines can read.
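To make the naming idea concrete, here is a minimal sketch in plain Python of what a rename step does to a single event. It is not Tealium configuration or a Tealium API. The slot-to-name pairings (`event12` → `cart_add`, `prop6` → `loyalty_status`, `customDimension3` → `product_category`) and the function `to_business_terms` are hypothetical and exist only to illustrate the mapping.

```python
from typing import Any

# Hypothetical mappings: which vendor slot holds which business concept
# varies by implementation; these pairings are for illustration only.
EVENT_NAMES = {"event12": "cart_add"}
ATTRIBUTE_NAMES = {"prop6": "loyalty_status", "customDimension3": "product_category"}


def to_business_terms(raw: dict[str, Any]) -> dict[str, Any]:
    """Rename a vendor-style event payload into business-friendly names.

    Keys without a known mapping are passed through unchanged so that
    no data is silently dropped.
    """
    event = raw.get("event")
    renamed: dict[str, Any] = {"event": EVENT_NAMES.get(event, event)}
    for key, value in raw.items():
        if key == "event":
            continue  # already handled above
        renamed[ATTRIBUTE_NAMES.get(key, key)] = value
    return renamed


if __name__ == "__main__":
    raw_hit = {"event": "event12", "prop6": "gold", "customDimension3": "shoes"}
    # prints {'event': 'cart_add', 'loyalty_status': 'gold', 'product_category': 'shoes'}
    print(to_business_terms(raw_hit))
```

Passing unmapped keys through unchanged is a deliberate choice in this sketch. A rename step that dropped unknown fields would quietly lose data.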

2. Low-Latency Data to Your Cloud

Using its streaming APIs and fast data transfers, Tealium pushes data into Snowflake, Databricks, or any other data cloud within seconds. That means analysts, marketers, and data scientists work with real-time data, not yesterday’s batch exports.

3. Real-Time Data Transformation

Raw data isn’t enough. Tealium’s streaming data layer allows you to transform data on the fly, enriching, normalizing, and preparing it before it even lands in your warehouse. This means downstream systems (BI, ML, personalization engines, and AI models) receive data that’s already fit for purpose, not a firehose of unstructured noise.
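The sketch below illustrates, in plain Python, the kinds of in-flight work described here: validating, normalizing, and enriching a single event before it is written to a warehouse. It is an assumption-laden illustration, not Tealium's transformation engine. The field names, the `REQUIRED_FIELDS` rule, and the in-memory `LOYALTY_BY_CUSTOMER` lookup are hypothetical stand-ins for whatever rules and enrichment sources your own pipeline uses.

```python
from datetime import datetime, timezone
from typing import Any

# Hypothetical enrichment source: in practice this might be a profile
# store or lookup table; here it is an in-memory dict for illustration.
LOYALTY_BY_CUSTOMER = {"c-1001": "gold"}

# Hypothetical validation rule: fields every event must carry.
REQUIRED_FIELDS = ("event", "customer_id")


def transform(event: dict[str, Any]) -> dict[str, Any]:
    """Validate, normalize, and enrich one event before it is loaded."""
    missing = [f for f in REQUIRED_FIELDS if not event.get(f)]
    if missing:
        raise ValueError(f"missing required fields: {missing}")

    out = dict(event)  # copy so the caller's event is not mutated

    # Normalize: consistent casing and whitespace for the event name,
    # and a numeric price rounded to two decimals.
    out["event"] = str(out["event"]).strip().lower()
    if "price" in out:
        out["price"] = round(float(out["price"]), 2)

    # Enrich: attach context the raw event did not carry, unless present.
    out.setdefault(
        "loyalty_status", LOYALTY_BY_CUSTOMER.get(out["customer_id"], "unknown")
    )

    # Metadata: when the pipeline processed the event (UTC, ISO 8601).
    out["processed_at"] = datetime.now(timezone.utc).isoformat()
    return out


if __name__ == "__main__":
    print(transform({"event": " Cart_Add ", "customer_id": "c-1001", "price": "19.99"}))
```

Raising an error on missing required fields is one design choice. A production pipeline might instead route such events to a quarantine table for later review.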

4. Filling the Data Gaps Across Touchpoints

Not every signal you need is available in your data warehouse today. Tealium fills those gaps with flexible, robust data collection, capturing new data sets at the edge and bringing them into a single, unified data layer. From there, Tealium enriches, contextualizes, and labels this data and adds metadata to it, so you can instantly blend these enhanced data sets with other sources in Snowflake, Databricks, or any data cloud to maintain a complete customer 360.

5. Future-Proofed Architecture

Instead of being boxed in by an analytics vendor’s UI, you own your raw, structured, and enriched data in your cloud warehouse of choice. That becomes your single source of truth, fueling BI dashboards, ML pipelines, personalization programs, and even your analytics tools, without compromise.

The Tealium Streaming Payoff: Speed, Clarity, and Control

- Speed: From collection to warehouse tables in seconds.

- Clarity: A data model in business terminology that anyone can read, including your data teams and AI models.

- Control: You decide what’s collected, how it’s named, and how it’s joined. Tealium handles the transformations to prepare the data for your downstream systems.

Bottom Line: Analytics platforms are for insights and answering questions. But if you use them as your data collection strategy, you’re undermining your data quality and limiting your AI future.

With Tealium streaming, your data is captured in your business’s language, enriched and transformed on the way in, and delivered in seconds, ready to fuel your data teams, your AI models, and every cloud-powered initiative.

Stop letting analytics tools dictate how you define your organization’s data. Own your data. Define it clearly. And get it into your AI models fast.