What does “real-time” mean to you? Less than a second? Ten seconds?

What about fifteen minutes?

Here’s the reality: many platforms that market themselves as real-time actually use micro-batching. They collect incoming data into a batch and process that batch on a fixed schedule, often every 15 minutes. That’s a fundamentally different architecture sold under the same label.

Getting a gate change alert 15 minutes after your flight moved gates isn’t a notification. It’s a headache, and let’s hope the overhead bin space isn’t full. Any customer data platform (CDP) that micro-batches at 15-minute intervals isn’t delivering real-time activation. It’s a delayed reaction with better branding.
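Here is a rough way to see what a fixed schedule costs. The sketch below assumes a simple model: a job runs every 15 minutes and can act on an event only at the next run after the event arrives. The function `batch_delay_seconds` is hypothetical and for illustration only. Real micro-batch systems add processing and delivery time on top of this wait.

```python
# Toy model (assumption): a micro-batch job runs at t = 0, 900, 1800, ... seconds,
# and an event can only be acted on at the first run strictly after it arrives.
BATCH_INTERVAL_S = 15 * 60  # assumed 15-minute schedule


def batch_delay_seconds(event_time_s: float, interval_s: float = BATCH_INTERVAL_S) -> float:
    """Seconds an event waits before the next scheduled batch run picks it up.

    Events arriving exactly on a boundary fall into the next bucket.
    """
    next_run = (event_time_s // interval_s + 1) * interval_s
    return next_run - event_time_s


if __name__ == "__main__":
    # One event per second, spread evenly across a single batch window.
    waits = [batch_delay_seconds(t) for t in range(BATCH_INTERVAL_S)]
    print(f"worst case: {max(waits) / 60:.1f} min")             # worst case: 15.0 min
    print(f"average:    {sum(waits) / len(waits) / 60:.2f} min")  # average:    7.51 min
```

In this simple model, an event waits up to a full interval, and about half an interval on average. Tuning the downstream step cannot recover that wait. Only shrinking or removing the batch interval can.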

Why Milliseconds Matter

When a website or mobile app loads, it triggers a cascade of request/response calls to multiple endpoints. These calls render the page, load images and styles, fire measurement and analytics trackers, and enable supporting services. How efficiently those requests are orchestrated determines performance, reliability, and the quality of data captured.

On the first page view, or when cached content has expired, the device requests the latest version of each file from the server. On subsequent requests, files are served from the local cache. No additional round trip is needed unless the cache expires or a refresh is explicitly triggered. That’s standard.

But personalization breaks the caching model. Dynamic content has to be requested at the moment the experience loads. You can build known journeys with pre-configured personalization. Once AI enters the picture, though, with hundreds of possible outputs for a single interaction, static approaches start to fall apart. Systems running on micro-batched data can’t keep up. The architecture won’t allow it.
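To illustrate the caching point, here is a toy model. It assumes a client cache that reuses a response until a time-to-live (TTL) expires and treats personalized responses as uncacheable. `ResponseCache` and the URLs are hypothetical. Real browsers follow HTTP caching rules (`Cache-Control`, validators, and so on) rather than this simplified logic.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ResponseCache:
    """Toy client-side cache (hypothetical): entries are reused until their TTL expires."""

    def __init__(self, fetch: Callable[[str], str], clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch  # performs a network round trip (stand-in for a real request)
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # url -> (body, expires_at)
        self.round_trips = 0

    def get(self, url: str, ttl_s: Optional[float]) -> str:
        """Return the body for url. ttl_s=None marks personalized content that is never cached."""
        now = self._clock()
        if ttl_s is not None:
            cached = self._entries.get(url)
            if cached is not None and cached[1] > now:
                return cached[0]  # cache hit: no round trip
        body = self._fetch(url)  # cache miss, expired entry, or personalized content
        self.round_trips += 1
        if ttl_s is not None:
            self._entries[url] = (body, now + ttl_s)
        return body


if __name__ == "__main__":
    fake_now = [0.0]
    cache = ResponseCache(fetch=lambda url: f"body of {url}", clock=lambda: fake_now[0])
    for _ in range(3):  # three page views
        cache.get("/styles.css", ttl_s=3600)         # static: fetched once, then cached
        cache.get("/offer-for-visitor", ttl_s=None)  # personalized: fetched every time
    print(cache.round_trips)  # 4: one for the stylesheet, three for the offer
```

Static assets cost one round trip no matter how often the page is viewed. Personalized content costs a round trip on every view, so the speed of whatever answers that request matters.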

600 Milliseconds: The Proof

We built a benchmark to show exactly what happens inside Tealium’s pipeline, step by step, with timestamps. You can run it yourself at financial.tealiumdemo.com/benchmark.html.

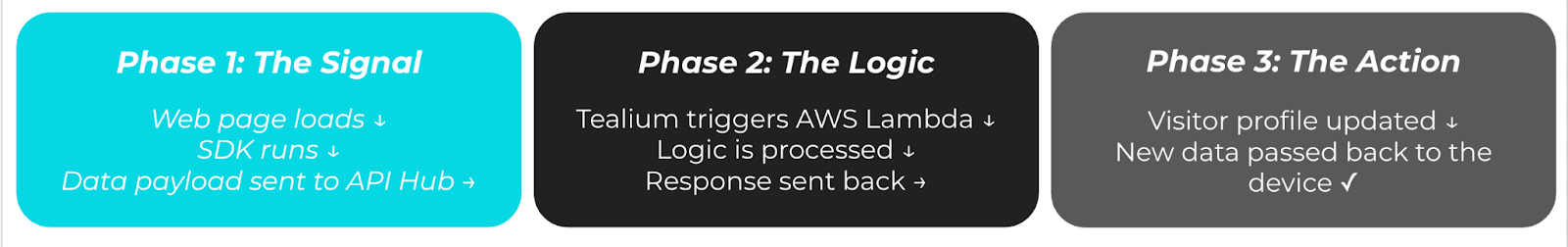

Here’s what the round trip looks like:

- Web page loads

- Tealium’s JS SDK loads

- Tealium Collect tag fires and sends the data payload

- Tealium’s API Hub collects the event and triggers an AWS Lambda request

- AWS Lambda function begins processing

- AWS Lambda returns a response and sends the data back to Tealium

- Tealium Function receives the AWS Lambda response

- Tealium visitor profile is updated

- Updated visitor profile is passed back to the device

Visually, it looks like this:

Nine steps. One round trip to the cloud and back. Under one second.
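At its core, a benchmark like this records a timestamp as each step completes. The sketch below shows that bookkeeping in a single process. It uses two hypothetical names: `StageTimer` and `PIPELINE_STEPS`, whose labels paraphrase the nine steps above. The sketch assumes one shared clock. When the steps run on different machines (browser, API Hub, Lambda), their clocks are not synchronized, so timing steps across machines needs more care than this.

```python
import time
from typing import Callable, List, Tuple

# Hypothetical labels paraphrasing the nine steps listed above.
PIPELINE_STEPS = [
    "web page loads",
    "JS SDK loads",
    "Collect tag sends payload",
    "API Hub triggers Lambda request",
    "Lambda begins processing",
    "Lambda returns response to Tealium",
    "Tealium Function receives response",
    "visitor profile updated",
    "profile passed back to device",
]


class StageTimer:
    """Records a timestamp as each step completes and reports elapsed milliseconds."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self._clock = clock
        self._start = clock()
        self.marks: List[Tuple[str, float]] = []

    def mark(self, step: str) -> None:
        self.marks.append((step, self._clock()))

    def report(self) -> List[Tuple[str, float, float]]:
        """(step, ms since previous mark, ms since start) for each recorded step."""
        rows = []
        previous = self._start
        for step, t in self.marks:
            rows.append((step, (t - previous) * 1000, (t - self._start) * 1000))
            previous = t
        return rows


if __name__ == "__main__":
    timer = StageTimer()
    for step in PIPELINE_STEPS:
        # ... perform or await the real step here (placeholder) ...
        timer.mark(step)
    for step, delta_ms, total_ms in timer.report():
        print(f"{step:<38} +{delta_ms:8.2f} ms  (total {total_ms:8.2f} ms)")
```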

In testing, we’ve seen total elapsed time as low as 600 milliseconds. It varies depending on how long the external vendor takes to process the request. To put that number in context: your brain recognizes a familiar face in about 600 ms. That’s the speed at which Tealium completes a full cycle of data capture, cloud processing, and profile activation. If your “real-time” system takes 15 minutes to process and retrieve data, that’s like running into someone at the store. You fumble through an awkward conversation because you can’t place them, and you remember exactly who they were only after you’ve driven away.
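For scale, here is the 600 ms figure above set against a 15-minute batch cycle:

$$
15\ \text{min} = 15 \times 60 \times 1000\ \text{ms} = 900{,}000\ \text{ms},
\qquad
\frac{900{,}000\ \text{ms}}{600\ \text{ms}} = 1{,}500
$$

A single 15-minute cycle is 1,500 times longer than the fastest observed round trip.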

The AI Problem Nobody’s Talking About

Most AI models in production today run on historical data, completely disconnected from what’s happening in the current session. Outputs are generated in batch, written to files, and ingested later into downstream platforms. By the time those insights are activated, the moment has passed.

This forces every experience to be driven by delayed data rather than real-time intent. And intent is perishable. The ability to influence a decision to buy is measured in seconds, not minutes. When action happens after the session ends, you’re not engaging a customer — you’re retargeting a ghost.

The real breakthrough isn’t choosing between in-session behavior and historical context. It’s combining both within the same sub-second window. Most systems can do one or the other. Doing both in as little as 600 milliseconds is what changes the output.
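One way to picture combining the two is a single context object built at decision time. This is a minimal sketch under assumptions: `SessionEvent`, `build_decision_context`, and the attribute names are hypothetical and are not Tealium’s profile schema.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SessionEvent:
    page: str       # hypothetical page path, e.g. "/pricing"
    dwell_s: float  # seconds spent on the page in this session


def build_decision_context(historical: Dict[str, object], session: List[SessionEvent]) -> Dict[str, object]:
    """Merge stored profile attributes with what the visitor is doing right now."""
    dwell: Counter = Counter()
    for event in session:
        dwell[event.page] += event.dwell_s
    top_page = dwell.most_common(1)[0][0] if dwell else None
    return {
        **historical,                     # long-term context: who this visitor has been
        "session_top_page": top_page,     # in-session intent: where attention is right now
        "session_pages": [e.page for e in session],
        "session_dwell_s": sum(dwell.values()),
    }


if __name__ == "__main__":
    ctx = build_decision_context(
        {"lifetime_orders": 3, "preferred_category": "savings"},
        [SessionEvent("/home", 10), SessionEvent("/pricing", 240)],
    )
    print(ctx["session_top_page"], ctx["lifetime_orders"])  # /pricing 3
```

A model or rule that reads this one object sees both signals at once, instead of one system handling history and another handling the session.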

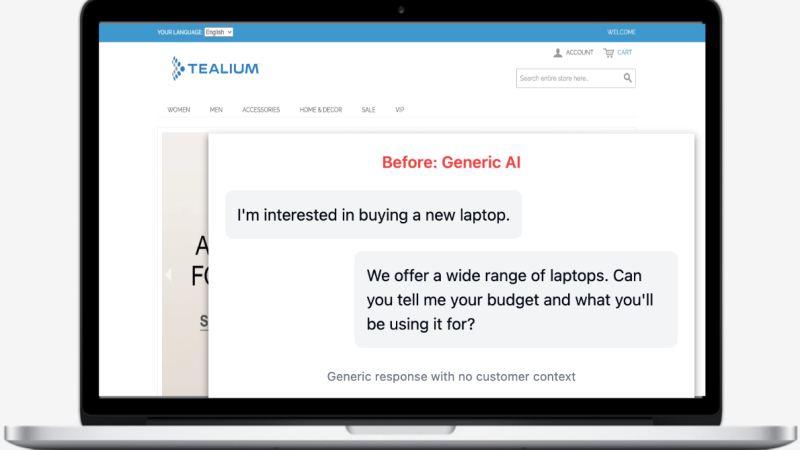

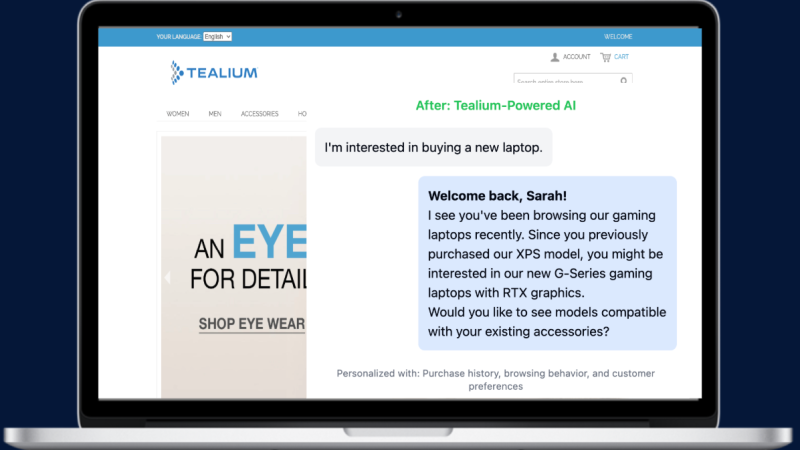

Consider a chatbot. Without real-time data, your chatbot opens with the same generic greeting whether the visitor just spent four minutes on your pricing page or arrived from a competitor comparison review.

With real-time profile data available at session start, that same chatbot opens with context: acknowledging what the visitor has been looking at, surfacing relevant information, skipping the discovery phase entirely. The interaction starts where the customer already is, not where the system assumes they might be.
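A rule-based stand-in makes the difference visible. In practice an AI model would choose the opener. The function `chatbot_opener`, the `/pricing` path, and the referrer check below are all hypothetical. The point is only that the opener depends on context that is available at session start.

```python
from typing import Optional


def chatbot_opener(top_page: Optional[str], referrer: Optional[str]) -> str:
    """Pick an opening line from real-time context, falling back to a generic greeting."""
    if top_page == "/pricing":
        return "Looks like you're comparing plans. Want help choosing the right tier?"
    if referrer and "compare" in referrer:
        return "Coming from a comparison review? I can walk you through how we differ."
    return "Hi! How can I help you today?"  # what every visitor gets without real-time data


if __name__ == "__main__":
    print(chatbot_opener("/pricing", None))
    print(chatbot_opener(None, None))
```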

This takes a business from reading a customer’s biography to actually shaking their hand. That’s more than a marginal improvement.

How do you do it?

Delivering this kind of responsiveness is an architectural commitment. You need true real-time data capture, sub-second decisioning, and activation that scales to millions of concurrent events — all happening within the session, not after it.
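Sub-second decisioning also means deciding what happens when a decision arrives late. A common pattern is a latency budget with a fallback, sketched below under assumptions. `decide_within_budget` is hypothetical, and the 0.6-second default simply mirrors the 600 ms figure above rather than any product setting.

```python
import concurrent.futures
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared worker pool so each decision doesn't pay thread start-up cost.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=8)


def decide_within_budget(decide: Callable[[], T], fallback: T, budget_s: float = 0.6) -> T:
    """Run a decision call; return the fallback if it misses the in-session latency budget."""
    future = _POOL.submit(decide)
    try:
        return future.result(timeout=budget_s)
    except concurrent.futures.TimeoutError:
        # cancel() only succeeds if the call hasn't started; a running call
        # finishes in the background and its late result is ignored.
        future.cancel()
        return fallback


if __name__ == "__main__":
    def slow_model() -> str:
        time.sleep(1.0)  # simulates a decision service that overruns the budget
        return "personalized offer"

    print(decide_within_budget(lambda: "personalized offer", "default offer"))  # personalized offer
    print(decide_within_budget(slow_model, "default offer"))                    # default offer
```

Returning a sensible default keeps the session moving. The late result is discarded rather than shown after the moment has passed. In this sketch, exceptions raised by `decide` propagate to the caller unchanged.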

That’s what Tealium’s pipeline was built to do. And the benchmark is there to prove it.

If your organization is evaluating real-time capability, run the benchmark. Then let’s talk about what sub-second activation means for your specific use cases.